The parser is quite efficient if the notion `syntactic head' implies that much syntactic information is shared between the head of a phrase and its mother. In this case the prediction step in the algorithm will be much better at `predicting' the head of the phrase. If on the other hand the notion `head' does not imply such feature percolations, then the parser must predict the head randomly from the input string as no top-down information is available.

The efficiency of the parser can be improved by common logic programming and parsing techniques. Firstly, it is possible to compile the grammar rules, lexical entries and parser a bit further by (un)folding. Secondly it is possible to integrate well-formed and ill-formed subgoal tables in the parser, following the technique described by [15]. The usefulness of this technique strongly depends on the actual grammars that are being used.

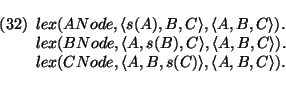

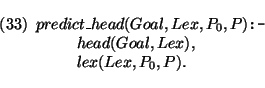

It is possible to use a more clever indexing of lexical entries. Firstly, it is possible to extract the elements from the lexicon that can be used to parse some given sentence, before the parser starts properly. That is, for each sentence the parser first selects those lexical entries that possibly could be used in the analysis of that sentence. The parsing algorithm proper then only takes these lexical entries into account when it searches the lexicon in the prediction step.

Furthermore, we can get rid of the subbag/3 predicate in the predict clause. Given the precompilation step mentioned above, we can use a slightly different representation of bags where the elements of the bag are not explicitly mentioned, but are implicitly represented by the position in the bag. To make this work we also allow bags where elements occur zero times. For example, given the sentence `a b b c a a', the corresponding bag will simply be:

The guide is instantiated as follows. The in-part will simply be the bag representation shown above. More precisely:

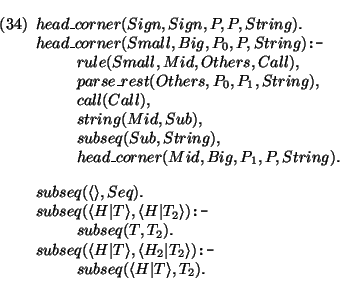

In some grammars the string operations that are defined are not only monotonic with respect to the words they dominate, but also with respect to the order constraints that are defined between these words (`order-monotonic'). For example in Reape's sequence union operation the linear precedence constraints that are defined between elements of a daughter are by definition part of the linear precedence constraints of the mother. Note though that the analysis of verb second in the foregoing section uses a string operation that does not satisfy this restriction. For grammars that do satisfy this restriction it is possible to extend the top-down prediction possibilities by the incorporation of an extra clause in the `head_corner' predicate which will check that the phrase that has been analysed up to that point can become a substring of the top string. To this purpose, the input string is percolated through the parser as an extra argument. Each time a rule has applied the parser checks whether the string derived up to that point can be a subsequence of the complete string. This is achieved using the subseq/2 predicate. In that case the revised `head_corner' clause looks as follows:

In some cases it is very useful to delay the constraint which defines the operations on strings until the parser is finished. For example, if the constraints are disjunctive then it may be useful to wait as long as possible before a choice in a certain direction is to be made. The important information for the parser is percolated anyway through the bag of words; the actual order of the words has (usually) not much influence on other choices of the parser. Hence a lot of uninteresting non determinism (from the parser's point of view) can thus be delayed. In practice this increased the efficiency of the parser for some grammars by a factor 3. Clearly this technique is incompatible with the improvement suggested above for order-monotonic grammars.