[19] proposes a grammatical formalism called Head Grammar (HG). HG is slightly more powerful than context-free grammar; the extra power comes from head wrapping operations. A head wrapping operation manipulates strings that contain a distinguished element, the head. Such a headed string is a pair consisting of an ordinary string and an index (a pointer to the head); for example, $\langle w_1 w_2 w_3 w_4, 3\rangle$ is the string $w_1 w_2 w_3 w_4$ whose head is $w_3$. Ordinary grammar rules define operations on such strings: an operation takes $n$ headed strings as its arguments and returns a headed string. A simple example is the operation that takes two headed strings and concatenates the first one to the left of the second one, where the head of the second one becomes the head of the result (this shows that the operations subsume ordinary concatenation). Pollard labels this rule LC2:


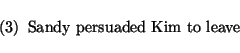
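As an illustration only, headed strings and the LC2 operation described above can be sketched in Python. The class `HeadedString` and the function `lc2` are my own hypothetical names; the head index is 1-based, following the notation $\langle w_1 w_2 w_3 w_4, 3\rangle$, and for simplicity the sketch does not allow empty headed strings.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class HeadedString:
    """A headed string: a sequence of words plus the 1-based index of its head."""
    words: Tuple[str, ...]
    head: int  # e.g. <w1 w2 w3 w4, 3> has head index 3

    def __post_init__(self) -> None:
        # Simplifying assumption: the head must point at an actual word.
        if not 1 <= self.head <= len(self.words):
            raise ValueError("head index out of range")

    def head_word(self) -> str:
        return self.words[self.head - 1]


def lc2(left: HeadedString, right: HeadedString) -> HeadedString:
    """Concatenate `left` to the left of `right`; the head of `right`
    (shifted by the length of `left`) becomes the head of the result."""
    return HeadedString(left.words + right.words, len(left.words) + right.head)


x = HeadedString(("w1", "w2", "w3", "w4"), 3)
print(x.head_word())                      # w3
y = lc2(HeadedString(("the",), 1), HeadedString(("old", "man"), 2))
print(" ".join(y.words), y.head, y.head_word())  # the old man 3 man
```

Since the result's words are simply the two word sequences one after the other, LC2 on its own behaves like ordinary concatenation, with the head bookkeeping added on top.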

The motivation Pollard presents for extending context-free grammars (in fact, GPSG) is linguistic. In particular, so-called discontinuous constituents can be handled by HG, whereas they are typical puzzles for GPSG. Apart from the above-mentioned subject-auxiliary inversion, he discusses the analysis of `transitive verb phrases' based on [1]. The idea is that in sentences such as

`persuaded' + `to leave' form a (VP) constituent, which then combines with the NP object (`Kim') by a wrapping operation.

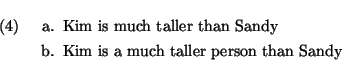
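A wrapping operation can be sketched in the same style. The function `wrap_after_head` below is a hypothetical simplification and not necessarily one of Pollard's own definitions: it inserts its second argument immediately to the right of the head of its first argument, and the head of the first argument remains the head of the result. Applied to the headed VP `persuaded to leave' (head `persuaded') and the object `Kim', it yields the desired word order.

```python
from typing import NamedTuple, Tuple


class Headed(NamedTuple):
    words: Tuple[str, ...]
    head: int  # 1-based index of the head word


def wrap_after_head(outer: Headed, inner: Headed) -> Headed:
    """Hypothetical wrapping operation: place `inner` directly after the
    head of `outer`; the head of `outer` stays the head of the result."""
    i = outer.head
    words = outer.words[:i] + inner.words + outer.words[i:]
    return Headed(words, outer.head)


vp = Headed(("persuaded", "to", "leave"), 1)
obj = Headed(("Kim",), 1)
result = wrap_after_head(vp, obj)
print(" ".join(result.words), result.head)  # persuaded Kim to leave 1
```

The VP is built as a continuous constituent first; only the wrapping step splits it, which is what makes the constituent discontinuous in the surface string.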

Further examples of head wrapping in English are the analyses of the following sentences,

where in the first two cases `taller than Sandy' is a constituent, and in the latter ones `easy to please' is a constituent.

Breton and Irish are VSO languages, for which it has been claimed that the V and the O form a constituent. Such an analysis is readily available using head wrapping, thus providing a non-transformational account of [16].

Finally, Pollard also provides a wrapping analysis of Dutch cross-serial dependencies.

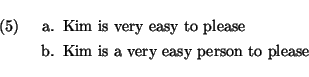

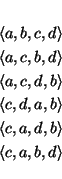

[11] discusses an extension of DCG in order to analyse the Australian free word-order language `Guugu Yimidhirr'. In ordinary DCG, a category is associated with a pair indicating which location the constituent occupies. Johnson proposes that, in the extended version of DCG, constituents be associated with a set of such pairs. A constituent thus `occupies' a set of continuous locations, which need not be adjacent to one another. The following is a sentence of Guugu Yimidhirr:

In this sentence, the discontinuous constituent `Yarraga-aga-mu-n ... biiba-ngun' (boy's father) is associated with the set of locations:

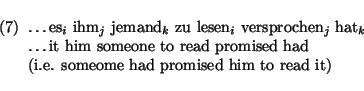
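Johnson's idea can be sketched by representing a location as a pair of string positions and the locations of a constituent as a set of such pairs. The sketch rests on my own assumptions rather than on Johnson's formulation: positions are integers with exclusive end points, the daughters of a constituent must occupy non-overlapping locations, and touching locations are merged. The functions `combine` and `normalize` are hypothetical, and the positions used do not correspond to the actual Guugu Yimidhirr sentence.

```python
from typing import FrozenSet, Iterable, Tuple

Span = Tuple[int, int]         # (start, end) string positions, end exclusive
Locations = FrozenSet[Span]    # the set of locations a constituent occupies


def overlaps(a: Span, b: Span) -> bool:
    return a[0] < b[1] and b[0] < a[1]


def normalize(locs: Iterable[Span]) -> Locations:
    """Merge touching spans, so that {(0, 1), (1, 2)} becomes {(0, 2)}."""
    merged = []
    for start, end in sorted(locs):
        if merged and merged[-1][1] == start:
            merged[-1] = (merged[-1][0], end)
        else:
            merged.append((start, end))
    return frozenset(merged)


def combine(x: Locations, y: Locations) -> Locations:
    """Locations of a constituent built from daughters at x and y.
    Assumption: the daughters may not occupy overlapping locations."""
    if any(overlaps(a, b) for a in x for b in y):
        raise ValueError("daughters occupy overlapping locations")
    return normalize(x | y)


possessor = frozenset({(0, 1)})   # hypothetical position of the possessor
head_noun = frozenset({(3, 4)})   # hypothetical position of the head noun
nominal = combine(possessor, head_noun)
print(sorted(nominal))                                # [(0, 1), (3, 4)]
print(sorted(combine(nominal, frozenset({(1, 3)}))))  # [(0, 4)]
```

A discontinuous constituent simply ends up with more than one pair in its set; once the intervening material is combined with it, the set collapses to a single continuous location.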

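[21] and [22] discuss an operation called sequence union to analyze discontinuous constituents. A sequence $s_3$ is a sequence union of two sequences $s_1$ and $s_2$ iff $s_3$ contains exactly the elements of $s_1$ and $s_2$, and, moreover, the original order of the elements of $s_1$ and of $s_2$ is preserved in $s_3$. Sequence union is therefore a relation rather than a function. For example, the sequence union of the sequences $\langle a, b\rangle$ and $\langle c, d\rangle$ is any of the sequences:

$\langle a, b, c, d\rangle$, $\langle a, c, b, d\rangle$, $\langle a, c, d, b\rangle$, $\langle c, a, b, d\rangle$, $\langle c, a, d, b\rangle$, $\langle c, d, a, b\rangle$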
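Because sequence union relates a pair of sequences to several results, one straightforward way to make the definition concrete is to enumerate all order-preserving interleavings of the two sequences. The generator `sequence_unions` below is a minimal sketch of my own (the name is hypothetical); for $\langle a, b\rangle$ and $\langle c, d\rangle$ it produces the six possible interleavings.

```python
from typing import Iterator, Sequence, Tuple


def sequence_unions(s1: Sequence, s2: Sequence) -> Iterator[Tuple]:
    """Yield every sequence that contains exactly the elements of s1 and s2
    and preserves the relative order within s1 and within s2."""
    if not s1:
        yield tuple(s2)
        return
    if not s2:
        yield tuple(s1)
        return
    # Either the first element of s1 comes first ...
    for rest in sequence_unions(s1[1:], s2):
        yield (s1[0],) + rest
    # ... or the first element of s2 does.
    for rest in sequence_unions(s1, s2[1:]):
        yield (s2[0],) + rest


for s in sequence_unions(("a", "b"), ("c", "d")):
    print("".join(s))
# prints, one per line: abcd acbd acdb cabd cadb cdab
```

For sequences of length $m$ and $n$ with distinct elements, the generator yields $\binom{m+n}{m}$ results; for $m = n = 2$ this gives six.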

Reape presents an HPSG-style grammar [20] for German and Dutch which uses the sequence union relation on word-order domains. The grammar handles several word-order phenomena in German and Dutch. Word-order domains are sequences of signs. The phonology of a sign is the concatenation of the phonology of the elements of its word-order domain. In `rules', the word-order domain of the mother sign is defined in terms of the word-order domains of its daughter signs. For example, in the ordinary case the word-order domain of the mother simply consists of its daughter signs. However, in specific cases it is also possible that the word-order domain of the mother is the sequence union of the word-order domains of its daughters.
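The relation between signs, word-order domains and phonology can be made concrete with a toy encoding. Everything in the sketch is hypothetical and far simpler than Reape's HPSG-style grammar: a `Sign` is either lexical (carrying a word) or phrasal (carrying a word-order domain), `ordinary` builds a mother whose domain consists of its daughters, and `unioned` builds the possible mothers whose domain is a sequence union of the daughters' domains. The placeholder words `o1`, `v1`, `o2`, `v2` stand for objects and the verbs they belong to.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass(frozen=True)
class Sign:
    word: str = ""                # phonology of a lexical sign
    dom: Tuple["Sign", ...] = ()  # word-order domain of a phrasal sign

    def phon(self) -> List[str]:
        """The word itself, or the concatenated phonologies of the domain."""
        if not self.dom:
            return [self.word]
        return [w for d in self.dom for w in d.phon()]


def interleavings(s1: Tuple, s2: Tuple) -> Iterator[Tuple]:
    """Sequence union: all order-preserving merges of s1 and s2."""
    if not s1 or not s2:
        yield s1 + s2
        return
    for rest in interleavings(s1[1:], s2):
        yield (s1[0],) + rest
    for rest in interleavings(s1, s2[1:]):
        yield (s2[0],) + rest


def ordinary(*daughters: Sign) -> Sign:
    """Ordinary case: the mother's domain simply consists of its daughters."""
    return Sign(dom=daughters)


def unioned(d1: Sign, d2: Sign) -> Iterator[Sign]:
    """Special case: the mother's domain is a sequence union of the daughters' domains."""
    for dom in interleavings(d1.dom, d2.dom):
        yield Sign(dom=dom)


o1, v1, o2, v2 = (Sign(w) for w in ("o1", "v1", "o2", "v2"))
inner = ordinary(o2, v2)  # domain <o2, v2>
outer = ordinary(o1, v1)  # domain <o1, v1>
print(" ".join(ordinary(inner, outer).phon()))  # o2 v2 o1 v1
phons = {" ".join(m.phon()) for m in unioned(inner, outer)}
print(sorted(phons))
```

In the ordinary case the phrase `inner` remains a single element of the mother's domain, so its words stay adjacent; with sequence union its words can be separated, as in `o2 o1 v2 v1`.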

The following German example by Reape clarifies the approach; I use indices to indicate to which verb each object belongs.

Figure 4 shows a derivation tree of this sentence, in which each node is labelled by the string associated with that node. Note that strings are defined with respect to word-order domains, and sequence union is defined on such domains. The strings of the derivation tree are thus only indirectly related, through the corresponding word-order domains.